As artificial intelligence reshapes economies, governance systems, and democratic processes, governments across the world are racing to regulate it. The European Union has opted for a highly structured, risk-based classification model through its AI Act, while the United States continues to rely largely on market-driven innovation with limited federal oversight. India, however, has chosen a distinctly different path, one rooted in trust, flexibility, and contextual governance rather than rigid regulation.

This choice is not accidental. It reflects India’s political economy, its developmental priorities, and the realities of governing a diverse, high-scale democracy.

The Limits of Tight AI Regulation in a Developing Democracy

For countries like India, overly prescriptive AI laws carry real risks. Strict, technology-specific regulation can:

- Slow down innovation in a fast-evolving field

- Exclude startups and smaller firms that lack compliance capacity

- Import global regulatory models that do not reflect local realities

India’s digital ecosystem, ranging from public platforms like Aadhaar and DigiLocker to AI-enabled welfare delivery, operates at a scale few countries can match. A one-size-fits-all regulatory approach would be not only impractical but potentially harmful to inclusion and access.

Instead of asking “How do we control AI?”, Indian policymakers are asking a more foundational question: “How do we build systems that people can trust while allowing innovation to flourish?”

Trust as the Cornerstone of AI Governance

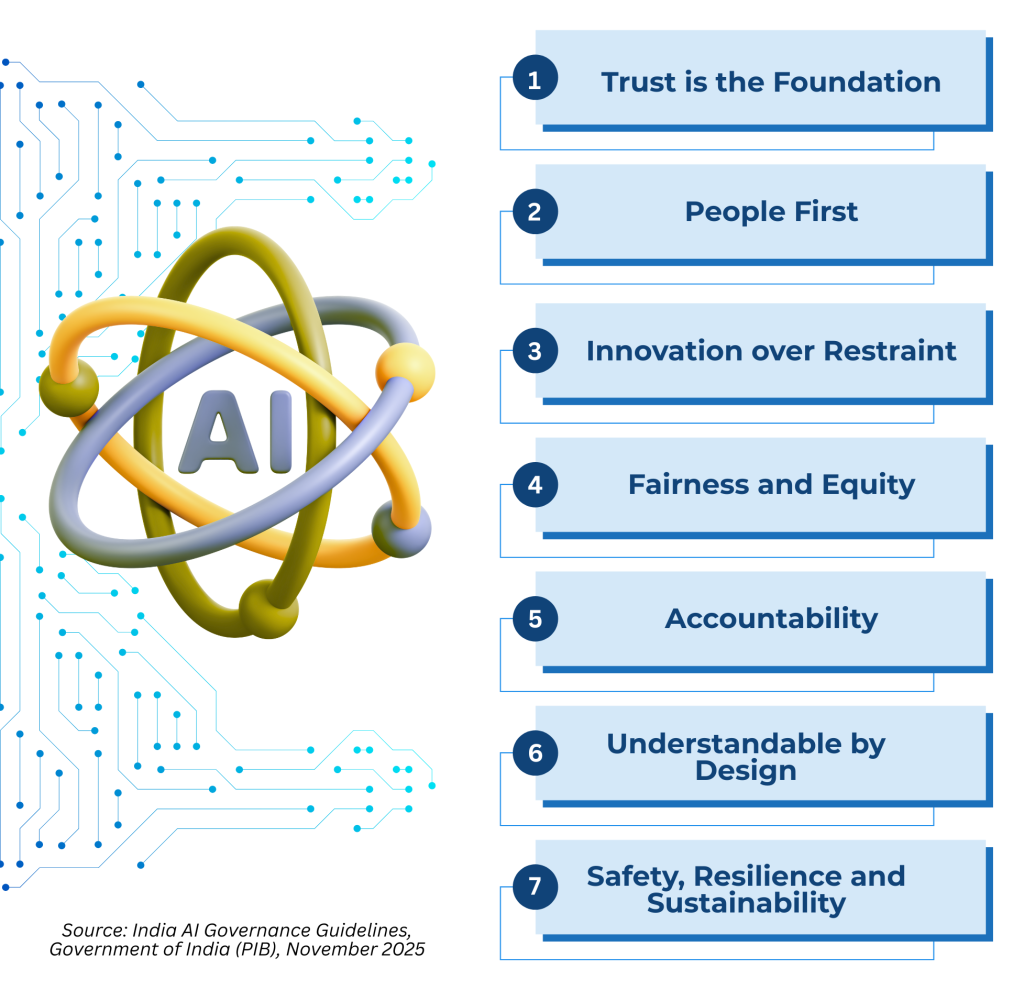

India’s AI governance framework places trust at the center—not as a vague ethical idea, but as an operational principle.

Trust in this context means:

- AI systems should be understandable and explainable where they affect citizens

- Accountability must be clearly defined across developers, deployers, and users

- Risks such as bias, misinformation, and misuse must be proactively addressed

Rather than regulating AI as a standalone technology, India focuses on governing outcomes—how AI is used, who it impacts, and what safeguards are in place when things go wrong.

A Principle-Based Approach Over Rule-Based Control

Instead of hard rules, India’s approach is anchored in broad guiding principles: human-centricity, fairness, accountability, safety, and inclusiveness. These principles provide direction without locking the ecosystem into regulatory frameworks that could quickly become outdated.

This approach allows:

- Regulators to adapt as technology evolves

- Sector-specific responses rather than blanket restrictions

- Innovation to continue while guardrails are gradually strengthened

Importantly, it acknowledges that AI risks vary widely, from low-risk automation tools to high-stakes systems used in policing, welfare targeting, or elections. Governance, therefore, must be proportionate and context-specific.

Institutions Over Immediate Legislation

Another defining feature of India’s strategy is its emphasis on building institutional capacity before enacting new laws. Rather than rushing into legislation, the focus is on developing:

- Dedicated governance bodies

- Risk assessment mechanisms

- Technical expertise within the state

Institutions such as the IndiaAI Safety Institute and inter-ministerial coordination mechanisms are meant to continuously study AI risks, recommend safeguards, and guide policymakers. This allows regulation to evolve based on evidence rather than fear or global pressure.

Using Existing Laws, Not Reinventing the Wheel

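India’s governance model also relies on existing legal frameworks to address AI-related harms: data protection law (the Digital Personal Data Protection Act, 2023), IT regulation (the Information Technology Act, 2000 and its rules), consumer protection, and criminal law.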

This avoids regulatory duplication and ensures that AI misuse is treated as a governance problem, not merely a technological one. Where gaps exist, targeted updates are preferred over sweeping AI-specific legislation.

A Development-First AI Strategy

At its core, India’s AI governance philosophy reflects its development priorities. AI is seen as a tool to:

- Improve public service delivery

- Enhance state capacity

- Reduce friction in welfare systems

- Support economic growth and employment

Heavy regulation at an early stage could limit these benefits, especially in sectors like health, education, agriculture, and governance where AI adoption is still emerging.

By choosing trust and adaptability, India aims to shape AI as a public good, not just a commercial technology.

A Distinct Global Path

India’s model represents a middle path in global AI governance:

- Less restrictive than Europe’s compliance-heavy regime

- More state-guided than America’s market-first approach

This approach may prove especially relevant for other developing and middle-income countries grappling with similar challenges of scale, diversity, and institutional capacity.

Conclusion

India’s decision to prioritize trust over tight regulation in AI governance is both strategic and pragmatic. It recognises that in a rapidly evolving technological landscape, flexibility, institutional learning, and public trust matter more than rigid rulebooks.

As AI becomes more embedded in governance and daily life, India’s approach will be tested. But for now, it offers a compelling case for why governance does not always begin with regulation—and why trust can sometimes be the strongest safeguard of all.